AI Hardware

Central Processing Units (CPUs), Graphics Processing Units (GPUs), Field-Programmable Gate Arrays (FPGAs), Application-Specific Integrated Circuits (ASICs), Neural Processing Units (NPUs)

AI Hardware: The Engine Behind Artificial Intelligence

Artificial intelligence (AI) is rapidly transforming the world, from self-driving cars to personalized healthcare. While the software side of AI gets a lot of attention, the hardware powering it is equally crucial. AI hardware is the physical infrastructure that allows AI algorithms to run efficiently and effectively.

Types of AI Hardware

AI hardware encompasses a wide range of components, each playing a vital role in enabling AI applications. Let's dive into the key players:

- Central Processing Units (CPUs):

Traditional CPUs are the workhorses of general-purpose computing. They can run AI workloads, but with relatively few cores they are not optimized for the massively parallel arithmetic that deep learning demands. Modern CPUs with vector and matrix extensions (for example, Intel's AVX-512 VNNI and AMX) can still be effective for smaller models, for inference, or as a complement to specialized hardware.

- Graphics Processing Units (GPUs):

GPUs were originally designed for rendering graphics in video games and other applications. Their parallel processing capabilities, with thousands of cores, make them exceptionally well-suited to the large matrix operations at the heart of deep learning. GPUs have become the dominant hardware for training AI models and are widely used for deploying them.

- Field-Programmable Gate Arrays (FPGAs):

FPGAs are hardware devices whose logic can be reconfigured after manufacturing to perform specific tasks. They offer a balance between flexibility and performance and are used mainly for low-latency, power-efficient inference; training models on FPGAs is uncommon. Programming them is also complex and requires specialized hardware-design skills.

- Application-Specific Integrated Circuits (ASICs):

ASICs are designed for a single purpose and, for that purpose, can offer the highest performance and energy efficiency. They are often tailored to specific AI algorithms or tasks, making them attractive for large-scale deployments. However, their development and manufacturing are expensive and time-consuming, and the logic cannot be changed once the chip is fabricated, so they are worthwhile mainly for high-volume or performance-critical applications.

- Neural Processing Units (NPUs):

NPUs are chips, or blocks within a larger chip, designed specifically for neural network workloads. They aim to deliver better performance per watt than GPUs on those workloads, especially for tasks like natural language processing and image recognition. Closely related accelerators include Google's Tensor Processing Unit (TPU), which is itself an ASIC, and the Movidius Myriad vision processing unit inside Intel's Neural Compute Stick.

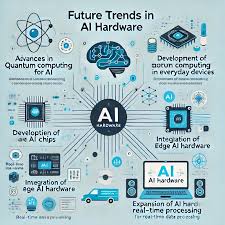
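To make the GPU point above more concrete, here is a minimal pure-Python sketch of matrix multiplication. It is only an illustration and not how any GPU library is implemented; the names `matmul` and `multiply_add_count` are hypothetical helpers introduced for this example. The thing to notice is that every output cell is an independent dot product, which is the kind of work that can be spread across many cores.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    rows, inner, cols = len(a), len(b), len(b[0])
    if any(len(row) != inner for row in a):
        raise ValueError("inner dimensions do not match")
    # Each output cell depends only on one row of `a` and one column of `b`,
    # so all rows * cols cells could in principle be computed at the same time.
    return [
        [sum(a[i][k] * b[k][j] for k in range(inner)) for j in range(cols)]
        for i in range(rows)
    ]


def multiply_add_count(rows, inner, cols):
    """Number of multiply-add steps in a (rows x inner) @ (inner x cols) product."""
    return rows * inner * cols


a = [[1, 2], [3, 4]]
b = [[5, 6], [7, 8]]
print(matmul(a, b))                          # [[19, 22], [43, 50]]
print(multiply_add_count(1024, 1024, 1024))  # 1073741824
```

A single 1024 x 1024 product already takes about a billion multiply-add steps. This loop runs them one at a time, whereas parallel hardware can work on many output cells at once.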

The choice of AI hardware depends on factors like the specific AI application, the size and complexity of the models, the desired performance, and budget constraints. As AI continues to evolve, we can expect further advancements in hardware, enabling even more powerful and efficient AI systems.
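As a rough illustration of how model size feeds into that choice, the sketch below estimates the memory needed just to hold a model's weights at different numeric precisions. The 7-billion-parameter model is a hypothetical example, and `weight_memory_gb` is a helper name made up for this sketch. The estimate ignores activations, optimizer state, and framework overhead, so real requirements will be higher.

```python
# Bytes needed to store one parameter at common precisions.
BYTES_PER_VALUE = {"float32": 4, "float16": 2, "int8": 1}


def weight_memory_gb(num_parameters, dtype="float16"):
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_parameters * BYTES_PER_VALUE[dtype] / 1e9


# Hypothetical model size, chosen only for illustration.
num_parameters = 7_000_000_000
for dtype in ("float32", "float16", "int8"):
    print(dtype, weight_memory_gb(num_parameters, dtype), "GB")
```

Comparing these figures with the memory available on a candidate device gives a quick first check on whether a model can fit at all, before performance or budget are considered.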

Summary

- AI hardware is essential for running AI algorithms efficiently.

- GPUs are currently the dominant hardware for AI, but other specialized hardware like FPGAs, ASICs, and NPUs are gaining traction.

- The choice of AI hardware depends on specific application requirements and constraints.

- Advancements in AI hardware are crucial for pushing the boundaries of AI capabilities.