A comprehensive guide to setting up and managing a Kubernetes cluster efficiently.

Ever wondered how to build a powerful, scalable, and robust Kubernetes infrastructure right on your Linux system? This guide walks through Kubernetes cluster setup, from initial configuration to deploying your applications, and aims to give you the knowledge to build your own Kubernetes infrastructure!

Kubernetes Infrastructure Setup: A Deep Dive

Prerequisites: Setting the Stage for Kubernetes

System Requirements: Checking the Minimum Specifications :-

- Before embarking on your Kubernetes journey, ensure your Linux system meets the minimum hardware and software requirements. Insufficient resources lead to instability, performance bottlenecks, and errors during installation and deployment. At the time of writing, the kubeadm installation docs ask for a compatible Linux host, at least 2 GB of RAM per machine, at least 2 CPUs on control-plane machines, full network connectivity between all machines, and a unique hostname, MAC address, and product_uuid for every node. Check the official Kubernetes documentation for the current requirements of your version, and size RAM, CPU, and disk space for your deployment strategy (single-node, multi-node, etc.). A dedicated machine with ample resources is strongly advised to avoid resource contention with other processes.

- Furthermore, consider the long-term scalability needs of your cluster. Starting with a system that can comfortably handle anticipated workload growth is a smart move. You don't want to be constantly upgrading your hardware mid-deployment. Planning ahead will save you time, effort, and potential headaches down the line. Remember, Kubernetes is designed for scalability, but this only works effectively if your underlying infrastructure is properly provisioned.
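A quick pre-flight check for the CPU and memory minimums can be scripted. The Python sketch below is illustrative, not an official tool: it reads `MemTotal` from `/proc/meminfo` (Linux only) and compares the values against thresholds taken from the kubeadm docs at the time of writing, which you should re-check for your version. The function names are hypothetical.

```python
# Thresholds from the kubeadm install docs at the time of writing (an
# assumption to re-check). 2 GiB is a conservative reading of "2 GB";
# hosts sold as 2 GB may report slightly less usable memory.
MIN_MEMORY_BYTES = 2 * 1024**3
MIN_CONTROL_PLANE_CPUS = 2


def read_total_memory_bytes(meminfo_path="/proc/meminfo"):
    """Return MemTotal from /proc/meminfo in bytes (Linux only)."""
    with open(meminfo_path) as f:
        for line in f:
            if line.startswith("MemTotal:"):
                # Lines look like "MemTotal:       16318412 kB".
                return int(line.split()[1]) * 1024
    raise ValueError(f"MemTotal not found in {meminfo_path}")


def check_minimums(cpus, memory_bytes, control_plane=True):
    """Return a list of problems; an empty list means the minimums are met."""
    problems = []
    # The 2-CPU minimum applies to control-plane machines only.
    if control_plane and cpus < MIN_CONTROL_PLANE_CPUS:
        problems.append(f"need {MIN_CONTROL_PLANE_CPUS}+ CPUs, found {cpus}")
    if memory_bytes < MIN_MEMORY_BYTES:
        problems.append(f"need 2 GiB+ RAM, found {memory_bytes / 1024**3:.1f} GiB")
    return problems
```

On the target machine, `check_minimums(os.cpu_count() or 0, read_total_memory_bytes())` returns an empty list when both minimums are met; pass `control_plane=False` when checking a worker node.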

Essential Packages: Ensuring Compatibility and Smooth Operation

- Before installing Kubernetes, ensure that all necessary packages are present on your Linux distribution. These packages include the utilities and dependencies Kubernetes needs, and the exact list varies by distribution. Use your distribution's package manager (like `apt` on Debian/Ubuntu or `yum` on CentOS/RHEL) to install them. Typical dependencies include a container runtime (such as containerd or CRI-O; Docker Engine works only through the `cri-dockerd` adapter, because Kubernetes removed dockershim in v1.24), networking tools, and other system utilities.

- Failure to install these packages can result in installation failures or runtime errors within the Kubernetes cluster. Before starting the installation, double-check the required dependencies for your specific Kubernetes version and Linux distribution to avoid unexpected issues. A systematic approach to package installation and verification is critical for a successful Kubernetes deployment, and keeping these packages updated improves security and performance.
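To catch missing dependencies early, you can check that the expected binaries are on `PATH` before installing. The sketch below uses Python's `shutil.which`; the tool list is a hypothetical example of what a kubeadm node commonly needs, not an authoritative list. `kubeadm` runs its own preflight checks (`kubeadm init phase preflight` runs them on their own), and those remain the source of truth.

```python
import shutil

# Hypothetical example list of tools a kubeadm node commonly needs. Derive
# the real list from the docs and kubeadm's preflight output for your version.
REQUIRED_TOOLS = ("containerd", "crictl", "conntrack", "iptables", "socat")


def missing_tools(tools=REQUIRED_TOOLS, which=shutil.which):
    """Return the tools that are not found on PATH, in the order given."""
    # `which` is injectable so the check can be tested without real binaries.
    return [tool for tool in tools if which(tool) is None]
```

Calling `missing_tools()` on a node lists anything you still need to install with `apt` or `yum`.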

Setting Up the Network: Establishing Seamless Communication :-

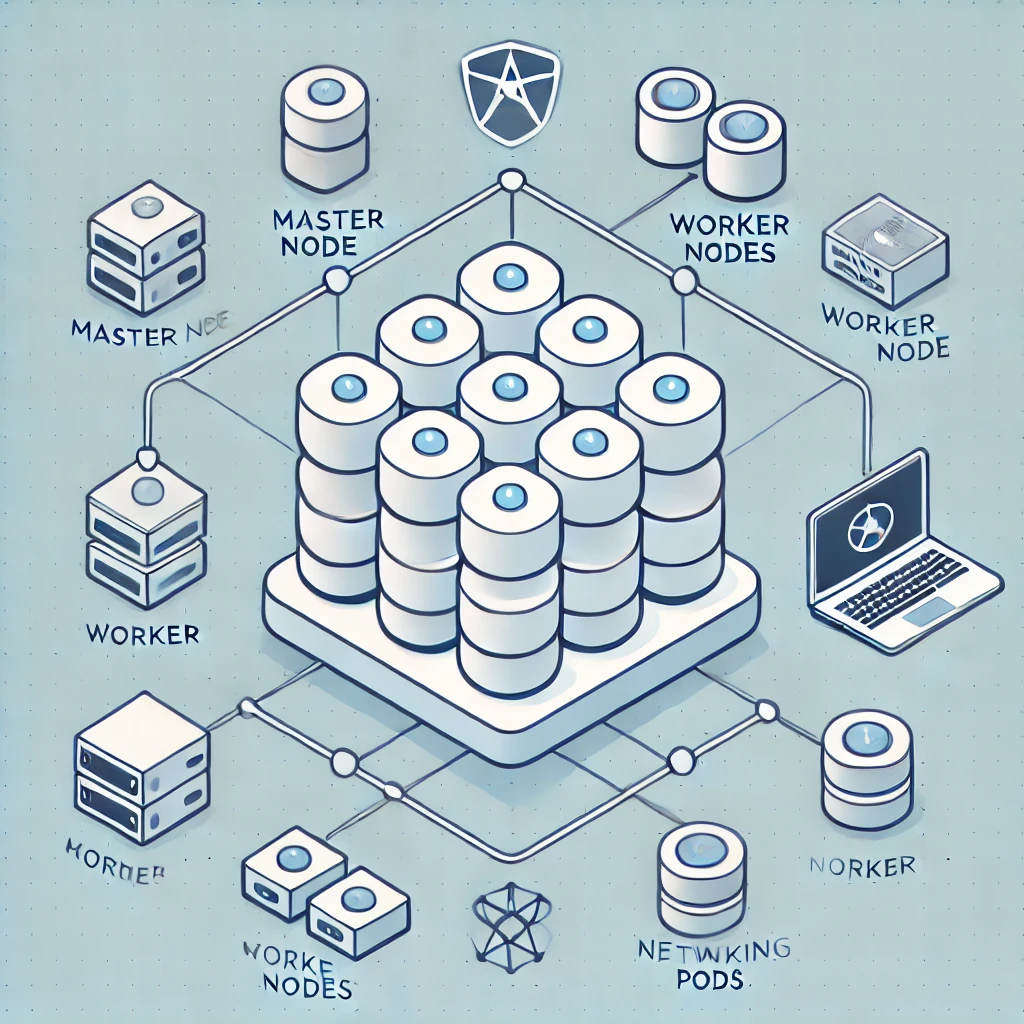

- Proper network configuration is paramount for a functional Kubernetes cluster. Kubernetes relies heavily on network communication between nodes (master and workers) and between pods. Every node must be able to reach every other node, which typically involves configuring IP addresses, DNS resolution, and firewall rules. In particular, the firewall must allow the ports Kubernetes uses, such as 6443 for the API server on the master node and 10250 for the kubelet on every node. Incorrect network configuration will result in connectivity issues.

- Consider using a dedicated virtual network or VLAN for your Kubernetes cluster to isolate it from other network traffic. This improves security and minimizes the risk of interference. If you're using a cloud provider, leverage their managed networking services to simplify the configuration process. For advanced network security and control, learn how Kubernetes NetworkPolicy objects work, keeping in mind that they are enforced only when your network plugin supports them. Test network connectivity between nodes before deploying applications.
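One way to test node-to-node connectivity is to probe the relevant TCP ports from each node. This minimal Python sketch distinguishes a refused connection (the host answered but nothing is listening yet, which is normal before Kubernetes is installed) from a timeout or routing error (more often a firewall or network problem). A firewall configured to reject rather than drop packets can also produce "refused", so treat the result as a hint. The port constants are the kubeadm defaults; the function name is hypothetical.

```python
import socket

# Default ports per the kubeadm docs: the API server on control-plane
# nodes and the kubelet API on every node.
API_SERVER_PORT = 6443
KUBELET_PORT = 10250


def probe(host, port, timeout=2.0):
    """Return "open", "refused", or "unreachable" for a TCP port."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return "open"
    except ConnectionRefusedError:
        # The host responded, but nothing accepts connections on this port.
        return "refused"
    except OSError:
        # Timeouts, "no route to host", DNS failures: likely network/firewall.
        return "unreachable"
```

For example, from a worker node, `probe("192.168.1.10", API_SERVER_PORT)` (hypothetical address) should return `"open"` once the control plane is up.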

Installing Kubeadm: The Foundation of Your Kubernetes Cluster

Downloading Kubeadm: Accessing the Latest Version

- Kubeadm is the recommended tool for bootstrapping a Kubernetes cluster. Begin by installing the latest stable version of Kubeadm from the official Kubernetes package repositories (pkgs.k8s.io) with your distribution's package manager, or by downloading a release binary from dl.k8s.io. The specific commands differ slightly depending on your distribution. Never download Kubeadm from untrusted sources, as doing so can introduce critical security vulnerabilities into your cluster.

- Once downloaded, verify the integrity of the package. This is a critical security step to prevent malicious code from compromising your system. Package managers do this automatically using the repository's signing key; for a manually downloaded binary, compare the checksum published alongside the official release with the checksum of the file you downloaded. This confirms the package hasn't been tampered with during the download. Tools like `sha256sum` can compute the checksum. Using the latest version of Kubeadm is recommended for optimal performance and access to the latest features and bug fixes.
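The comparison that `sha256sum` performs can also be scripted with Python's `hashlib`. This sketch streams the file in chunks and compares the result with a published checksum string. It assumes the published value is either the bare hex digest or the common `<hash>  <filename>` layout; the function names are hypothetical.

```python
import hashlib
import hmac


def sha256_of(path, chunk_size=1 << 20):
    """Hash the file in 1 MiB chunks so large binaries are not read at once."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_checksum(path, published):
    """Return True if the file's SHA-256 matches the published checksum."""
    # Accept "<hash>" or "<hash>  <filename>"; keep only the first field.
    expected = published.strip().split()[0].lower()
    return hmac.compare_digest(sha256_of(path), expected)
```

If `verify_checksum("kubeadm", published_text)` returns `False`, delete the file and download it again from the official source rather than running it.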

Installation Process: A Step-by-Step Guide

- The installation process for Kubeadm is relatively straightforward. Consult the official Kubernetes documentation for the most accurate and up-to-date instructions; these usually involve using your distribution's package manager to install `kubeadm` together with `kubelet` and `kubectl`. Make sure your system is updated before proceeding, and check that swap is disabled or explicitly configured, since by default the kubelet refuses to start when swap is enabled. A simple update command followed by a system reboot is often advisable to prevent conflicts and ensure a clean installation.

- Following the installation, you will typically need to initialize the master node by running the `kubeadm init` command. This command sets up the necessary Kubernetes components on the master node, including the control plane and etcd. The exact commands and options vary with your chosen configuration and setup, so refer to the official Kubernetes documentation for your specific version and distribution.
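Because the package manager differs by distribution, a setup script might choose the install command from `/etc/os-release`. The Python sketch below does that for the Debian and RHEL families, using the package names and the `--disableexcludes=kubernetes` option shown in the kubeadm docs. It assumes the Kubernetes package repository is already configured, and it only builds the command; the function names are hypothetical.

```python
def parse_os_release(text):
    """Parse /etc/os-release style KEY=value lines into a dict."""
    info = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        info[key] = value.strip().strip("\"'")
    return info


def install_command(os_release):
    """Return the argv that installs kubelet, kubeadm, and kubectl."""
    # ID_LIKE lists parent distributions, e.g. "rhel centos fedora" on Rocky.
    family = {os_release.get("ID", "")} | set(os_release.get("ID_LIKE", "").split())
    packages = ["kubelet", "kubeadm", "kubectl"]
    if family & {"debian", "ubuntu"}:
        return ["sudo", "apt-get", "install", "-y", *packages]
    if family & {"rhel", "centos", "fedora"}:
        return ["sudo", "yum", "install", "-y", *packages, "--disableexcludes=kubernetes"]
    raise ValueError("unsupported distribution: " + os_release.get("ID", "unknown"))
```

On Debian-family systems, the docs also recommend pinning these packages (`apt-mark hold kubelet kubeadm kubectl`) so routine upgrades don't change the cluster version unexpectedly.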

Verification: Ensuring Successful Installation :-

- After installing Kubeadm and initializing the master node, it is crucial to verify the successful installation. This verification process usually involves checking the status of the control plane components and ensuring all components are running as expected. The output of the initialization command usually provides basic status information. Further checks might involve using kubectl to view the status of various Kubernetes components.

- Running `kubectl get nodes` is a common verification command. It displays the status of all nodes in the cluster, indicating whether they are ready and functioning correctly. Expect the master node to report `NotReady` until a pod network add-on is installed. `kubectl get pods -n kube-system` shows whether the control plane components themselves are running. Address any errors during the initialization or verification processes immediately, and review the logs carefully for clues to diagnose and resolve them. Troubleshooting steps are well documented in the Kubernetes documentation.
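The control-plane check can be scripted as well. This minimal Python sketch runs `kubectl get pods -n kube-system -o json` and lists pods whose phase is neither `Running` nor `Succeeded`. A `Running` phase only means a pod was scheduled and at least one container started; it does not prove every container is ready. The function names are hypothetical.

```python
import json
import subprocess


def kube_system_pods_json():
    """Fetch kube-system pods as JSON (requires a working kubeconfig)."""
    return subprocess.run(
        ["kubectl", "get", "pods", "-n", "kube-system", "-o", "json"],
        capture_output=True, text=True, check=True,
    ).stdout


def unhealthy_pods(pods_json):
    """Return (name, phase) for pods that are not Running or Succeeded."""
    pods = json.loads(pods_json)
    return [
        (pod["metadata"]["name"], pod.get("status", {}).get("phase", "Unknown"))
        for pod in pods.get("items", [])
        if pod.get("status", {}).get("phase") not in ("Running", "Succeeded")
    ]
```

Right after `kubeadm init`, `unhealthy_pods(kube_system_pods_json())` often reports the CoreDNS pods as `Pending`; that usually just means no pod network add-on has been installed yet.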

Initializing the Kubernetes Cluster: Bringing It All Together

The Initialization Process: Preparing the Master Node :-

- The initialization process uses the `kubeadm init` command, which sets up the control plane components on your master node. These components manage the cluster and coordinate operations across worker nodes: etcd, the Kubernetes API server, the scheduler, and the controller manager. The process also generates kubeconfig files, including the admin kubeconfig at `/etc/kubernetes/admin.conf`, which you'll need to manage the cluster with `kubectl`.

- During initialization, Kubeadm also deploys the kube-proxy add-on (a network proxy that maintains network rules on each node) and CoreDNS. It does not install a pod network: you must install a Container Network Interface (CNI) plugin, such as Calico or Flannel, before pods can communicate across nodes. Initialization might take some time, depending on your system's resources, so monitor the output carefully for errors; it provides important information and troubleshooting hints. Review the available options (for example `--pod-network-cidr`, which some network plugins require) to customize the initialization for your needs.
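If you script the initialization, building the `kubeadm init` argument list in one place makes the chosen options explicit. The sketch below covers three real `kubeadm init` flags (`--pod-network-cidr`, `--apiserver-advertise-address`, `--dry-run`) and validates addresses with Python's `ipaddress` module before anything runs. The function name is hypothetical, and `10.244.0.0/16` in the usage note is only an example value (it is Flannel's default). The kubeconfig steps are the ones `kubeadm init` prints for regular users.

```python
import ipaddress


def kubeadm_init_cmd(pod_network_cidr=None, advertise_address=None, dry_run=False):
    """Build the argv for `kubeadm init` from a few commonly used flags."""
    cmd = ["sudo", "kubeadm", "init"]
    if pod_network_cidr:
        # Raises ValueError on a malformed CIDR (or one with host bits set).
        ipaddress.ip_network(pod_network_cidr)
        cmd.append(f"--pod-network-cidr={pod_network_cidr}")
    if advertise_address:
        ipaddress.ip_address(advertise_address)  # raises ValueError if malformed
        cmd.append(f"--apiserver-advertise-address={advertise_address}")
    if dry_run:
        cmd.append("--dry-run")  # show what would happen without changing the host
    return cmd


# Shell steps kubeadm init prints so a regular user can run kubectl.
KUBECONFIG_STEPS = [
    "mkdir -p $HOME/.kube",
    "sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config",
    "sudo chown $(id -u):$(id -g) $HOME/.kube/config",
]
```

A dry run first, via `subprocess.run(kubeadm_init_cmd("10.244.0.0/16", dry_run=True), check=True)`, is a cheap way to review the plan before the real initialization.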

Joining Worker Nodes: Expanding the Cluster :-

- Once the master node is initialized, you can add worker nodes to expand your cluster's capacity. Each worker node runs the kubelet, kube-proxy, and a container runtime, which together execute pods and applications. To add a worker node, use the join command that Kubeadm prints at the end of initialization. This command includes the API server address, a bootstrap token, and a hash of the cluster's CA certificate, which together let the worker node discover and securely join the cluster.

- The join command consists of `kubeadm join`, followed by the API server address and the `--token` and `--discovery-token-ca-cert-hash` values printed during master node initialization. Bootstrap tokens expire after 24 hours by default; if yours has expired, running `kubeadm token create --print-join-command` on the master node prints a fresh join command. Joining establishes communication between the worker node and the master node, allowing the worker to participate in the cluster. Ensure that all worker nodes meet the kubeadm minimum requirements, and that every node has network connectivity to the master node and to the other nodes.
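Copying a join command between machines can garble the token or hash, so a provisioning script might validate both before running anything. This sketch checks the bootstrap-token format (`[a-z0-9]{6}.[a-z0-9]{16}`) and the `sha256:<64 hex>` CA hash form, then builds the argv. The endpoint, token, and hash in the usage note are placeholders, and the function name is hypothetical.

```python
import re

# Bootstrap token format: 6 and 16 lowercase alphanumerics joined by a dot.
TOKEN_RE = re.compile(r"[a-z0-9]{6}\.[a-z0-9]{16}")
CA_HASH_RE = re.compile(r"sha256:[0-9a-f]{64}")


def kubeadm_join_cmd(endpoint, token, ca_cert_hash):
    """Validate the join parameters and return the `kubeadm join` argv."""
    if not TOKEN_RE.fullmatch(token):
        raise ValueError("token must look like abcdef.0123456789abcdef")
    if not CA_HASH_RE.fullmatch(ca_cert_hash):
        raise ValueError("CA hash must look like sha256:<64 hex characters>")
    return ["sudo", "kubeadm", "join", endpoint,
            "--token", token,
            "--discovery-token-ca-cert-hash", ca_cert_hash]
```

For example, `kubeadm_join_cmd("192.168.1.10:6443", "abcdef.0123456789abcdef", "sha256:" + "a" * 64)` returns the argv to run on a worker, assuming those placeholder values are replaced with the ones your master node printed.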

Post-Initialization Checks: Validation of the Cluster :-

- After adding worker nodes, perform thorough post-initialization checks. Verify that all nodes are communicating properly and are ready to accept workloads. Use `kubectl get nodes` to check the status of all nodes; every node should be in the `Ready` state. A node that isn't ready indicates a problem that needs immediate attention.

- Inspect the logs on both the master and worker nodes for errors or warnings (for example, the kubelet's logs via `journalctl -u kubelet` on systemd-based distributions). These logs provide valuable insight into issues within the cluster. If you encounter problems, consult the Kubernetes documentation and troubleshooting guides for solutions. Regular health checks are crucial for the smooth operation and stability of your Kubernetes cluster.
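For scripted health checks, the node conditions in `kubectl get nodes -o json` are easier to evaluate than the table output. This Python sketch returns the names of nodes whose `Ready` condition is not `"True"`, treating a missing condition as not ready. For a simple blocking check, `kubectl wait --for=condition=Ready nodes --all --timeout=120s` serves the same purpose. The function name and sample data are hypothetical.

```python
import json


def not_ready_nodes(nodes_json):
    """Return names of nodes whose Ready condition is not "True"."""
    nodes = json.loads(nodes_json)
    bad = []
    for node in nodes.get("items", []):
        conditions = node.get("status", {}).get("conditions", [])
        # A node with no Ready condition yet is treated as not ready.
        ready = next((c.get("status") for c in conditions if c.get("type") == "Ready"), "Unknown")
        if ready != "True":
            bad.append(node["metadata"]["name"])
    return bad
```

Feed it the output of `kubectl get nodes -o json`; an empty list means every node reports `Ready`.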

Deploying Applications: Putting Kubernetes to Work

Containerization Basics: Understanding Docker and Images :-

- Before deploying applications, it's crucial to understand containerization. Docker is a popular containerization technology that creates isolated environments for running applications. Docker images are pre-built packages containing everything needed to run an application. Using Docker images simplifies application deployment and ensures consistency across environments.

- Understanding Docker concepts like images, containers, and registries is essential before working with Kubernetes. Familiarize yourself with Docker commands such as `docker build`, `docker run`, and `docker push`. These commands allow you to build, run, and share Docker images. Properly building and managing Docker images ensures the efficient and reliable deployment of applications within your Kubernetes cluster.
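Image references tie images and registries together: `nginx:1.25` silently means an image on Docker Hub, while `ghcr.io/org/app` names another registry. The Python sketch below follows a simplified version of Docker's normalization rules. The first path component counts as a registry only if it contains `.` or `:` or is `localhost`; bare names get the `library/` prefix on `docker.io`; and the tag defaults to `latest`. It is illustrative and not a complete reference parser.

```python
def parse_image_ref(ref):
    """Split an image reference into (registry, repository, tag_or_digest)."""
    name, digest = (ref.split("@", 1) + [None])[:2]
    tag = None
    # A colon after the last slash starts the tag; earlier colons are registry ports.
    if name.rfind(":") > name.rfind("/"):
        name, tag = name.rsplit(":", 1)
    first, _, rest = name.partition("/")
    if rest and ("." in first or ":" in first or first == "localhost"):
        registry, repository = first, rest
    else:
        registry, repository = "docker.io", name
        if "/" not in repository:
            repository = "library/" + repository  # official Docker Hub images
    # If both are present, the digest pins the image and wins over the tag.
    return registry, repository, digest or tag or "latest"
```

`parse_image_ref("localhost:5000/myapp:v1")` gives `("localhost:5000", "myapp", "v1")`, which is where `docker push` would send that image.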

Creating Deployments: Managing Application Instances :-

- Kubernetes Deployments are the primary way to manage application instances. Deployments define the desired state of your applications, and Kubernetes automatically ensures that the desired state is maintained. This includes handling scaling, updates, and rollbacks. A simple Deployment specification involves defining the number of desired replicas, the Docker image to use, and the resource requirements of the application.

- You can use `kubectl create deployment` to create a simple deployment directly from command-line flags, for example `kubectl create deployment web --image=nginx --replicas=3`. For anything beyond the basics, describe the deployment in a YAML manifest and apply it with `kubectl apply -f`. The manifest includes the name of the deployment, the image to use, the number of replicas, and any other necessary configuration options. Understanding how to write these YAML files is crucial for deploying applications effectively within your Kubernetes cluster. Kubernetes then handles the creation, scaling, and management of application instances automatically.
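The structure of a Deployment manifest is easiest to see by building one. This Python sketch produces an `apps/v1` Deployment as a dictionary with a replica count, a selector that matches the Pod template labels (the API server rejects a mismatch), one container image, and resource requests. The function name, labels, and default request values are hypothetical. Because JSON is valid input for `kubectl apply`, the output can be piped straight in.

```python
import json


def deployment_manifest(name, image, replicas=1, cpu="100m", memory="128Mi"):
    """Return a minimal apps/v1 Deployment manifest as a dict."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": dict(labels)},
        "spec": {
            "replicas": replicas,
            # The selector must match the Pod template's labels.
            "selector": {"matchLabels": dict(labels)},
            "template": {
                "metadata": {"labels": dict(labels)},
                "spec": {
                    "containers": [{
                        "name": name,
                        "image": image,
                        "resources": {"requests": {"cpu": cpu, "memory": memory}},
                    }],
                },
            },
        },
    }


def to_json(manifest):
    """Serialize a manifest for `kubectl apply -f -`."""
    return json.dumps(manifest, indent=2)
```

For example, printing `to_json(deployment_manifest("web", "nginx:1.25", replicas=3))` and piping it to `kubectl apply -f -` creates or updates a three-replica Deployment.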

Managing Services: Exposing Applications to the Outside World :-

- Kubernetes Services provide a stable and consistent way to access applications running within your cluster. They act as an abstraction layer over the underlying Pods, providing a stable IP address and DNS name even when the underlying Pods are restarted or scaled. This stability matters both for other workloads inside the cluster and for external access to your applications.

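- Different types of Services exist, each catering to different access patterns: ClusterIP (the default, reachable only from inside the cluster), NodePort (exposed on a port of every node), LoadBalancer (provisioned through an external load balancer, typically from a cloud provider), and ExternalName (a DNS alias for a service outside the cluster). Ingress is not a Service type but a separate resource that routes HTTP(S) traffic to Services and requires an Ingress controller. Choosing the right option depends on your specific needs and infrastructure. Proper configuration allows you to access your applications reliably, regardless of changes within the cluster.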
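A Service manifest shows how the type, the Pod selector, and the ports fit together. This Python sketch builds a `v1` Service for the selector-based types. It rejects a `nodePort` outside the default 30000-32767 range (clusters can change that range through an API server flag) and excludes ExternalName, which has no selector. The function name and example values are hypothetical.

```python
SELECTOR_TYPES = ("ClusterIP", "NodePort", "LoadBalancer")


def service_manifest(name, app_label, port, target_port,
                     service_type="ClusterIP", node_port=None):
    """Return a minimal v1 Service that selects Pods labeled app=<app_label>."""
    if service_type not in SELECTOR_TYPES:
        raise ValueError(f"unsupported type here: {service_type}")
    port_spec = {"port": port, "targetPort": target_port, "protocol": "TCP"}
    if node_port is not None:
        # NodePort and LoadBalancer Services both allocate node ports.
        if service_type == "ClusterIP":
            raise ValueError("nodePort does not apply to ClusterIP Services")
        if not 30000 <= node_port <= 32767:
            raise ValueError("nodePort must be in the default range 30000-32767")
        port_spec["nodePort"] = node_port
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {"type": service_type, "selector": {"app": app_label},
                 "ports": [port_spec]},
    }
```

`service_manifest("web", "web", 80, 8080, "NodePort", 30080)` exposes Pods labeled `app=web` on port 30080 of every node and forwards traffic to container port 8080.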

Troubleshooting and Maintenance: Ensuring Continuous Operation

Common Issues: Addressing Frequent Problems :-

- Troubleshooting is a crucial part of managing a Kubernetes cluster. Common issues include connectivity problems, resource constraints, and deployment failures. Understanding the common causes of these problems and how to diagnose and resolve them is essential for maintaining a healthy cluster.

- Tools like `kubectl describe` show detailed information about Pods, Deployments, and Services, including recent events, which helps pinpoint problems. Inspecting logs, both Kubernetes system logs and application logs (for example with `kubectl logs`), helps diagnose issues. Careful monitoring is key to identifying and resolving problems before they escalate.
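Many deployment failures show up as a container stuck in a waiting state with a telling reason. This Python sketch scans `kubectl get pods -o json` output and reports containers waiting with reasons that usually indicate a problem rather than a normal start-up. The set of reasons is a hand-picked assumption, and init containers are not checked. The function name and sample data are hypothetical.

```python
import json

# Hand-picked waiting reasons that usually signal a problem (an assumption).
PROBLEM_REASONS = {
    "CrashLoopBackOff", "ImagePullBackOff", "ErrImagePull", "CreateContainerConfigError",
}


def problem_containers(pods_json):
    """Return (pod, container, reason) for containers stuck on a problem reason."""
    pods = json.loads(pods_json)
    found = []
    for pod in pods.get("items", []):
        for cs in pod.get("status", {}).get("containerStatuses", []):
            reason = cs.get("state", {}).get("waiting", {}).get("reason")
            if reason in PROBLEM_REASONS:
                found.append((pod["metadata"]["name"], cs["name"], reason))
    return found
```

For each hit, `kubectl describe pod <pod>` shows the related events, and for `CrashLoopBackOff`, `kubectl logs <pod> --previous` shows output from the last crashed run.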

Monitoring and Logging: Tracking System Health :-

- Implementing a comprehensive monitoring and logging solution is critical for ensuring the ongoing health and stability of your Kubernetes cluster. Monitoring tools provide real-time insights into the cluster's resource usage and performance, enabling proactive problem detection and resolution. Centralized logging aggregates logs from various components, facilitating easier troubleshooting and analysis.

- Tools like Prometheus and Grafana provide powerful monitoring capabilities, while tools like Elasticsearch, Fluentd, and Kibana (EFK stack) offer robust centralized logging solutions. Integrating these tools provides a holistic view of your cluster's health, allowing for proactive problem identification and improved operational efficiency. This proactive approach to monitoring and logging is essential for maintaining a reliable and high-performing Kubernetes environment.
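Once Prometheus is scraping the cluster, its HTTP API makes simple health checks scriptable. This Python sketch queries the `/api/v1/query` endpoint and uses the built-in `up` metric (1 when a scrape succeeds, 0 when it fails) to list unreachable targets. `PROMETHEUS_URL` is a hypothetical address, and the function names are hypothetical too.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

PROMETHEUS_URL = "http://prometheus.example:9090"  # hypothetical address


def query_url(base_url, promql):
    """Build an instant-query URL for the Prometheus HTTP API."""
    return f"{base_url.rstrip('/')}/api/v1/query?{urlencode({'query': promql})}"


def fetch(base_url, promql, timeout=5):
    """Run the query and return the raw JSON response body."""
    with urlopen(query_url(base_url, promql), timeout=timeout) as resp:
        return resp.read().decode()


def down_targets(response_json):
    """Return the label sets of targets whose `up` sample is 0."""
    body = json.loads(response_json)
    if body.get("status") != "success":
        raise RuntimeError(body.get("error", "query failed"))
    # Each vector sample is [timestamp, "value"]; values arrive as strings.
    return [r["metric"] for r in body["data"]["result"] if r["value"][1] == "0"]
```

Running `down_targets(fetch(PROMETHEUS_URL, "up"))` from a cron job or CI step is a lightweight complement to Grafana dashboards and alerting rules.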

Cluster Scaling: Adjusting Capacity as Needed :-

- Kubernetes makes it comparatively straightforward to adjust your cluster's capacity as demand changes. At the node level, horizontal scaling means adding more worker nodes (for example with `kubeadm join`), while vertical scaling means increasing the resources (CPU, memory) of existing nodes. The optimal scaling strategy depends on your workload and budget.

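- Kubernetes also automates many aspects of scaling, including scheduling application Pods and allocating resources across nodes. You can scale a Deployment manually with `kubectl scale deployment <name> --replicas=<count>`, or automatically with a HorizontalPodAutoscaler, which adjusts the replica count based on observed metrics (CPU and memory metrics require metrics-server). Node counts can be automated too, typically with the Cluster Autoscaler on supported cloud providers. Proper scaling management is essential for maintaining optimal performance and cost-efficiency in your Kubernetes environment.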
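The HorizontalPodAutoscaler documentation describes its core calculation as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), with no change while the ratio stays within a tolerance (0.1 by default). The Python sketch below reproduces only that formula plus min/max clamping, so you can sanity-check what autoscaling will do. The real controller also applies stabilization windows and special handling for unready Pods and missing metrics, which are omitted here. The function name and the min/max defaults are assumptions.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Apply the basic HPA scaling formula with tolerance and bounds."""
    ratio = current_metric / target_metric
    # Close enough to the target: the HPA leaves the replica count alone.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))
```

`desired_replicas(3, 90, 60)` gives 5: at 90% CPU against a 60% target, 3 × 1.5 = 4.5, which rounds up to 5 replicas.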